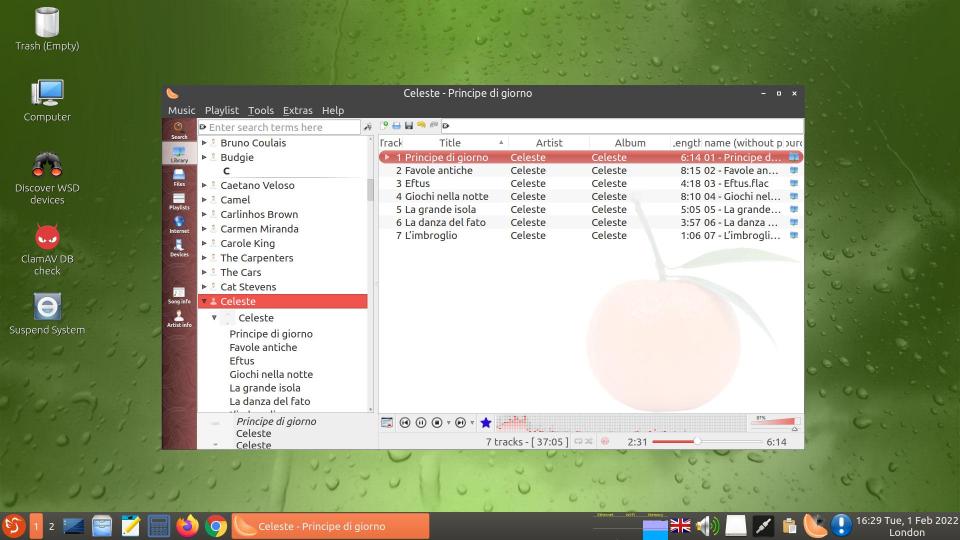

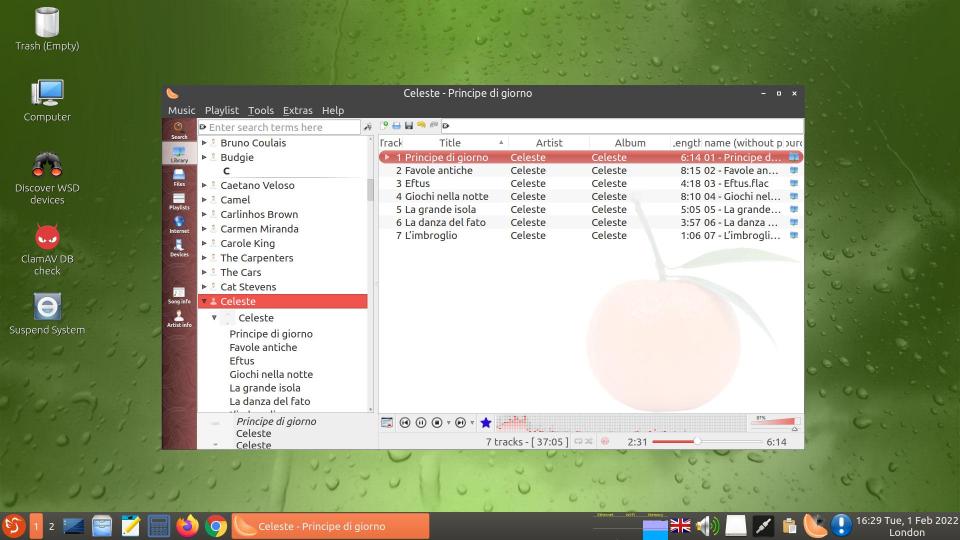

Clementine music player in GeckoLinux LXQt on my 43-inch TV screen.

Background/History

Back in early 2010, when nettops were the latest thing, I bought an ASRock ION 330HT nettop, billed as an ‘HTPC‘ (Home Theatre PC):

- CPU: Intel Atom 330 1.6GHz (Dual core)

- Memory: Supports DDR2 800MHz, 2 x SO-DIMM slots, default 2GB (2 x 1GB), maximum up to 4GB (due to the CPU limitation, the actual memory size available to the OS may be less than 4GB).

- Chipset: NVIDIA ION graphics processor

- Graphics: NVIDIA ION Graphics, supports DX10 / HD 1080p playback

- Audio: 7.1 CH HD Audio with DTS

- HDD: 320GB 2.5″ HDD, capable of supporting RAID 0 and 1 by adopting a second 2.5″ HDD

- ODD: DVD Super Multi

- LAN: Gigabit Ethernet

- WLAN: 802.11b/g/n Wi-Fi

- Rear I/O: 1 x HDMI, 1 x D-Sub VGA, 6 x USB 2.0, 1 x S/PDIF, 1 x powered eSATA/USB (For powered eSATA function, Hot Plug function is supported in RAID / AHCI mode only. IDE mode does not support Hot Plug function.)

- Remote Controller: MCE remote controller

- External Power Unit: 65W/19V adapter

- Firmware: PC BIOS (no UEFI)

In 2010 so-called smart TVs were not really that smart. I still had a large Sony Trinitron TV with a CRT, and I wanted to see if I could use the nettop with it. I bought a DVB-T USB adapter to enable the nettop to access digital terrestrial television, and I installed XBMC (now called KODI). I installed the now-defunct Sabayon Linux, and had a hell of a job getting ASRock’s CIR [Windows] MCE (Media Center Edition) remote to work. ASRock only released a driver (lirc_wb677) for the Nuvoton w836x7hg CIR chip in the nettop for Ubuntu 9.10, 10.04 and 10.10, and I had to patch it to get it to work with LIRC in Sabayon Linux. Later that year developer Jarod Wilson released the first version of a new driver named nuvoton-cir for the Nuvoton w836x7hg chip, and in 2011 I had another struggle to get that working with LIRC and XBMC in Sabayon Linux.

To be able to use the DVB-T USB adapter I installed Tvheadend in Sabayon Linux, which worked well, although the adapter needed to be connected to the house TV aerial in order to provide good reception, i.e. the small indoor aerial supplied with the DVB-T adapter was next to useless.

I bought a VGA-to-Composite Video converter to connect the nettop’s D-Sub VGA socket to the TV’s composite video input. The Linux Desktop displayed on the CRT TV screen was OK-ish but, as you would expect, not comparable to the display on a TFT monitor.

Basically, I was not satisfied with the result, and the nettop went back into its box after very little use. I did get it out briefly in 2016 to upgrade the 2GB RAM (two 1GB modules) to the maximum allowable 4GB (two 2GB modules) in case I might want to use the nettop in future. With two 2GB RAM modules the nettop detects 3327MB of RAM, which limits what can be done with it.

When ‘proper’ smart TVs came onto the market, there was no longer any incentive to use an HTPC; everything and more that a nettop HTPC did could be done by a smart TV. In 2015 I succumbed and bought an LG smart TV, added a USB 1TB HDD, connected my DVD player to the TV and forgot about the nettop. The LG TV developed a fault three years later. I fixed it but its lack of catch-up TV apps for some of the main TV stations became irritating so, three years ago, I bought a new TV. The media player on the TV (a FINLUX TV) cannot play FLAC music files, and the Web browser is very slow with a buggy UI, so I began thinking about resurrecting the ASRock nettop in order to be able to browse the Web properly on my TV and to play my music files through the TV’s sound bar. I finally got around to doing this recently, so here is the story…

Connections

I have a Rii i8 mini wireless keyboard which I used with my smart TV, so I connected its lead with USB wireless receiver pigtail and micro-USB charging plug pigtail to one of the USB ports on the back of the nettop. The lead is long enough to enable the USB wireless receiver (about the size of a USB Type A plug) to sit between the sound bar and the TV stand. The micro-USB charging plug pigtail lies out of sight on the TV stand behind the sound bar, ready to charge the mini keyboard when needed. Excellent wireless mini keyboard with touchpad, by the way.

The nettop is connected to the TV by an HDMI cable. The sound bar is connected to the TV by a 3.5mm jack plug cable, and connected to the nettop by an S/PDIF (optical) cable. I use the sound bar’s remote to switch easily between TV audio and nettop audio.

Finding a suitable Linux distribution

Given the limitations of the nettop’s CPU and memory, I wanted to install a distribution with a lightweight Desktop Environment. I like LXQt, so that would have been my choice if possible. Gentoo Linux is installed on my laptops, and Lubuntu 21.04 on my family’s desktop. LXQt is available for Gentoo Linux, but I would not dream of installing Gentoo Linux on a relatively slow nettop with less than 4GB accessible. Lubuntu, on the other hand, seemed a good candidate, so I created a Live USB pendrive with Lubuntu 21.10, which booted fine on all my other machines (including a legacy machine with PC BIOS only, not UEFI) but would not boot on the ASRock nettop. It would get as far as the GRUB menu then stall. So I tried Mageia (the Xfce release, as there is no Live LXQt release), but the result was similar. So then I tried PCLinuxOS (also the Xfce release, as there is no Live LXQt release), and that did install and run nicely (although the edges of the file manager’s windows were thick dashed lines). Everything worked well until I selected Suspend and tried to Resume, at which point the following messages appeared on the screen and the nettop hung:

[ 1774.594461] IRQ 26: no longer affine to CPU1

[ 1774.602213] IRQ 16: no longer affine to CPU3

[ 1774.602227] IRQ 18: no longer affine to CPU3

[ 1774.613499] TSC synchronization [CPU#0 -> CPU#1]:

[ 1774.613504] Measured 377387956 cycles TSC warp between CPUs, turning off TSC clock.

[ 1774.613552] TSC found unstable after boot, most likely due to broken BIOS. Use 'tsc=unstable'.

[ 1774.609000] clocksource: Checking clocksource tsc synchronization from CPU 1 to CPUs 0.

[ 1774.609000] clocksource: CPUs 0 ahead of CPU 1 for clocksource tsc.

[ 1774.609000] clocksource: CPU 1 check durations 6592ns - 6592ns for clocksource tsc.

_

I could get rid of the clock-related messages by adding ‘tsc=unstable‘ to the kernel boot line in /boot/grub/grub.cfg, but I could not get rid of the ‘no longer affine’ messages and the hanging every time the nettop resumed from suspension. I wondered if the BIOS was to blame, so I downloaded onto a FAT32-formatted USB pendrive the latest version (1.2) of the 330HT BIOS from the ASRock Web site and installed it on the nettop (easy: press F6 at boot), but the problem remained. I began to wonder if any modern Linux release would work on this nettop.
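On distributions that generate grub.cfg with a tool such as grub2-mkconfig, a hand edit to grub.cfg is liable to be lost the next time the file is regenerated. The following is only a sketch of a more persistent way to add ‘tsc=unstable’, assuming GRUB 2, GNU sed and a defaults file at /etc/default/grub containing a GRUB_CMDLINE_LINUX_DEFAULT line (I have not checked that PCLinuxOS is laid out this way). The function name add_kernel_param is made up for illustration.

```shell
#!/bin/sh
# Sketch: append a kernel parameter to GRUB_CMDLINE_LINUX_DEFAULT.
# Assumptions: GRUB 2 with a defaults file such as /etc/default/grub, and GNU sed (-i).
# add_kernel_param is a hypothetical helper name, not part of any package.
add_kernel_param() {
    param="$1"
    grub_defaults="${2:-/etc/default/grub}"

    # Do nothing if the parameter is already present on the line.
    if grep -q "^GRUB_CMDLINE_LINUX_DEFAULT=\".*${param}" "$grub_defaults"; then
        return 0
    fi

    # Insert the parameter just before the closing double quote.
    sed -i "s|^\(GRUB_CMDLINE_LINUX_DEFAULT=\"[^\"]*\)\"|\1 ${param}\"|" "$grub_defaults"
}

# Example (as root): add_kernel_param tsc=unstable
```

After changing the defaults file, grub.cfg would still need to be regenerated with the distribution’s own tool (for example grub2-mkconfig or update-grub, depending on the distribution).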

So it was time to try another distribution. My searches on DistroWatch showed that GeckoLinux (“a Linux spin based on the openSUSE distribution, with a focus on polish and out-of-the-box usability on the desktop” according to its Web site) has static and rolling editions based on openSUSE Leap and openSUSE Tumbleweed respectively, and has many Desktop Environment releases, including LXQt. The availability of LXQt attracted my attention, but I was also curious to try openSUSE and the Btrfs file system. I did try openSUSE briefly many years ago (possibly more than a decade), but I have never used Btrfs. So I decided it was worth a shot.

I downloaded the latest available ISO for GeckoLinux ROLLING LXQt and used dd on one of my Linux machines to create a bootable USB pendrive:

user $ sudo blkid # Find out which device is the pen drive

user $ sudo dd if=/home/fitzcarraldo/Downloads/GeckoLinux_ROLLING_LXQt.x86_64-999.220105.0.iso of=/dev/sdd bs=4M status=progress && sync
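Before writing an ISO to a pendrive with dd it can be worth confirming that the download is intact. This is a minimal sketch, assuming a SHA-256 checksum is published alongside the ISO (I am not asserting that it is) and that GNU coreutils sha256sum is available. The function name verify_iso and the variable PUBLISHED_SHA256 are hypothetical.

```shell
#!/bin/sh
# Sketch: compare a file's SHA-256 digest with an expected value.
# verify_iso is a hypothetical helper; it returns 0 on a match, non-zero otherwise.
verify_iso() {
    iso="$1"
    expected="$2"
    # sha256sum -c reads "HASH  FILENAME" lines (two spaces) from standard input;
    # --status suppresses output so only the exit code reports the result.
    printf '%s  %s\n' "$expected" "$iso" | sha256sum -c --status -
}

# Example, with PUBLISHED_SHA256 set to the value from the download page:
# verify_iso ~/Downloads/GeckoLinux_ROLLING_LXQt.x86_64-999.220105.0.iso "$PUBLISHED_SHA256" \
#     && echo "Checksum OK, safe to run dd"
```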

I booted the pendrive on the nettop and launched the GeckoLinux installer, which had no trouble installing the OS on the nettop’s HDD. Further on I point out a couple of minor niggles I found with the application menu but, by and large, I find GeckoLinux Rolling LXQt provides a good, polished user interface and experience.

Setting up auto login and disabling a lock screen

LXQt Desktop in GeckoLinux LXQt on my 43-inch TV screen.

I found that, in order to get auto login working correctly in the installation, I needed to specify a user password during installation and then set up auto login after booting into the new installation:

‘Application Menu’ > ‘Preferences’ > ‘YaST User and Group Management’

- Select the user and click on ‘Expert Options’

- Select ‘Login Settings’

- Tick ‘Auto Login’

- Tick ‘Passwordless Logins’

One needs to be a little careful not to end up with both light-locker and XScreenSaver providing lock screens. I wanted only a screensaver and no locking of the user session after a period of inactivity. Any press of a key or tap of the touchpad on my Rii i8 mini wireless keyboard will simply stop the screensaver animation and then display the Desktop.

‘Application Menu’ > ‘Preferences’ > ‘LXQt Settings’ > ‘Session Settings’

- In ‘Basic Settings’, untick ‘Lock screen before suspending/hibernating’.

- In ‘Autostart’, ‘XScreenSaver’ under ‘LXQt Autostart’ needs to be ticked.

‘Application Menu’ > ‘Preferences’ > ‘Screensaver’

If a window appears informing you that the XScreenSaver daemon is not running and offering to launch it, click ‘OK’.

- Select ‘Mode: Only One Screen Saver’.

- Select a screensaver animation (I use ‘GL Matrix’).

‘Application Menu’ > ‘System Tools’ > ‘dconf Editor’

Configure the following settings for light-locker:

| Key | Value |
|---|---|
| idle-hint | false |
| late-locking | false |
| lock-after-screensaver | 0 |
| lock-on-lid | false |
| lock-on-suspend | false |
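The same light-locker values can probably be set from a terminal rather than through dconf Editor. The sketch below assumes the keys live in the GSettings schema apps.light-locker, which can be checked with ‘gsettings list-keys apps.light-locker’. The function name apply_light_locker_settings is made up, and passing ‘echo’ as its argument gives a dry run that only prints the commands.

```shell
#!/bin/sh
# Sketch: apply the light-locker settings listed above using gsettings.
# Assumption: the keys belong to the GSettings schema "apps.light-locker".
# apply_light_locker_settings is a hypothetical helper name.
apply_light_locker_settings() {
    # Optional command prefix; pass "echo" to print the commands instead of running them.
    runner="${1:-}"
    while read -r key value; do
        $runner gsettings set apps.light-locker "$key" "$value"
    done <<'EOF'
idle-hint false
late-locking false
lock-after-screensaver 0
lock-on-lid false
lock-on-suspend false
EOF
}

# Dry run: print the five commands without changing anything.
apply_light_locker_settings echo
```

Calling the function with no argument, as the logged-in user rather than root, would run the commands for real.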

‘Application Menu’ > ‘Preferences’ > ‘LXQt Settings’ > ‘Power Management’

Untick ‘Enable Battery Watcher’, ‘Enable Lid Watcher’ and ‘Enable Idleness Watcher’ on the respective tabs.

Setting the hostname

I set a static hostname (I opted for ‘ion330ht’) by selecting ‘Application Menu’ > ‘Preferences’ > ‘YaST Network’ and entering the hostname on the ‘Hostname/DNS’ tab.

Package Management

Both the YaST Software Management GUI and the zypper command are new to me, so I still have a lot to learn.

The main package repositories were already added, but to learn how to add other repositories manually see the following articles:

Anyway, these are the repositories currently in use on this nettop:

ion330ht:/home/fitzcarraldo # zypper repos

Repository priorities in effect: (See 'zypper lr -P' for details)

90 (raised priority) : 1 repository

97 (raised priority) : 1 repository

98 (raised priority) : 2 repositories

99 (default priority) : 4 repositories

115 (lowered priority) : 4 repositories

# | Alias | Name | Enabled | GPG Check | Refresh

---+--------------------------------------+------------------------+---------+-----------+--------

1 | Google-chrome | Google-chrome | Yes | (r ) Yes | Yes

2 | Google-talkplugin | Google-talkplugin | Yes | (r ) Yes | Yes

3 | Nvidia | Nvidia | Yes | (r ) Yes | Yes

4 | Packman_Tumbleweed | Packman_Tumbleweed | Yes | (r ) Yes | Yes

5 | Tumbleweed_OSS | Tumbleweed_OSS | Yes | (r ) Yes | Yes

6 | Tumbleweed_OSS-updates | Tumbleweed_OSS-updates | Yes | (r ) Yes | Yes

7 | Tumbleweed_non-OSS | Tumbleweed_non-OSS | Yes | (r ) Yes | Yes

8 | http-download.opensuse.org-f6f93dd3 | openSUSE:Tumbleweed | Yes | (r ) Yes | Yes

9 | http-opensuse-guide.org-a78c9b99 | libdvdcss repository | Yes | (r ) Yes | Yes

10 | https-download.opensuse.org-96367b31 | network:im:signal | Yes | (r ) Yes | Yes

11 | https-download.opensuse.org-a5f414ff | openSUSE:Tumbleweed | Yes | (r ) Yes | Yes

12 | skype-stable | Skype-stable | Yes | ( ) No | Yes

Repositories 8 to 11 in the above list were added when I used ‘1 Click Install’ on an openSUSE Software Web page for a specific package.

Most of what I needed was already installed, and I installed a few other packages using either the YaST Software Management GUI, the zypper command or ‘1 Click Install’:

● To be able to use the locate command to search for specific files:

mlocate

● To be able to configure the LXQt Keyboard State Indicator on the Panel to display the flag of the keyboard language:

iso-country-flags-png

● I was not sure if online updates would be advisable, but it looked potentially useful:

yast2-online-update-configuration

● Some Web sites are not displayed correctly in Firefox, and I use Google’s Chrome browser for those:

google-chrome-stable

● The Clementine music player (already installed) has the ability to display visualisations using projectM:

projectM

projectM-data

However, I could not get projectM to load its visualisation files; I need to tinker more with it.

● I wanted to implement my scheme to scan automatically any files downloaded into the ~/Downloads/ directory (see my 2017 post), so I installed the following packages:

clamav

kdialog

inotify-tools

acl

(See further down for the additional steps I took in order to get my scheme to work in GeckoLinux/openSUSE.)

● A GUI front-end to ClamAV in case I wanted to scan any files or directories manually:

clamtk

● Although not essential, I installed the package monitoring-plugins-clamav in case I wanted to use it to check if the virus signatures are current; my Bash script in a 2021 post serves the same purpose.

● To provide the commands dig, host and nslookup in case I need them in future:

bind-utils

● To provide the man command and pages from the Linux Documentation Project:

man-pages

man

● To enable me to specify the window colour and size etc. in xterm, if I wish:

xtermset

● To provide a GUI utility to show the amount of used and unused space in each partition:

filelight

● Various multimedia codecs were already installed, but I had to install the package libdvdcss2 in order to be able to play commercial DVDs, as VLC would not play them. I installed it by using ‘1 Click Install’:

https://software.opensuse.org/package/libdvdcss2

● I use Signal Messenger, so I installed the package signal-desktop by using ‘1 Click Install’:

https://software.opensuse.org/package/signal-desktop

However, a subsequent rolling update flagged a dependency conflict requiring it to be uninstalled.

● To enable machines running Windows to browse SMB shares in File Explorer I installed the WS-Discovery daemon:

wsdd

● To be able to edit tags in my music files:

kid3-qt

● To be able to copy characters not available on the keyboard:

kcharselect

● To install ir-keytable, *.toml files and 70-infrared.rules so that I could try to get the ASRock CIR MCE remote working using the in-kernel support for IR decoders, instead of LIRC:

v4l-utils

● I no longer use KODI but I wanted to see if I could get the ASRock CIR MCE remote to control it using the in-kernel support for IR decoders instead of LIRC:

kodi

● I prefer SMPlayer to VLC (which came installed in GeckoLinux Rolling LXQt):

smplayer

● To be able to edit .mkv files, e.g. to change the default audio language etc.:

mkvtoolnix

mkvtoolnix-gui

Web Service Discovery host daemon (wsdd)

Having installed the package wsdd by using ‘Preferences’ > ‘YaST Software Management’ I performed the following steps as root user:

ion330ht:/home/fitzcarraldo # systemctl enable wsdd

I edited /etc/systemd/system/multi-user.target.wants/wsdd.service and added ‘--workgroup=HOME‘ to the ExecStart line, as my Windows workgroup is HOME rather than WORKGROUP:

ExecStart=/usr/sbin/wsdd --shortlog --workgroup=HOME -c /run/wsdd $WSDD_ARGS

ion330ht:/home/fitzcarraldo # systemctl daemon-reload

ion330ht:/home/fitzcarraldo # systemctl start wsdd
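The file under multi-user.target.wants is normally a symbolic link to the packaged unit file, so an edit made there may be overwritten when the wsdd package is updated. A systemd drop-in is one way to keep the change separate. The following is a sketch only: the function name write_wsdd_dropin is hypothetical, the drop-in file name override.conf is a common convention rather than a requirement, and the ExecStart line is simply the one quoted above.

```shell
#!/bin/sh
# Sketch: write a systemd drop-in that overrides ExecStart for wsdd.
# write_wsdd_dropin is a hypothetical helper name.
# $1 = workgroup name, $2 = drop-in directory (defaults to the system location).
write_wsdd_dropin() {
    workgroup="$1"
    dropin_dir="${2:-/etc/systemd/system/wsdd.service.d}"
    mkdir -p "$dropin_dir"
    # The empty ExecStart= clears the packaged command before the new one is set.
    # \$WSDD_ARGS is escaped so that it is written literally into the file.
    cat > "$dropin_dir/override.conf" <<EOF
[Service]
ExecStart=
ExecStart=/usr/sbin/wsdd --shortlog --workgroup=$workgroup -c /run/wsdd \$WSDD_ARGS
EOF
}

# Example (as root): write_wsdd_dropin HOME
```

After writing the drop-in, ‘systemctl daemon-reload’ followed by ‘systemctl restart wsdd’ would be needed for it to take effect. The $WSDD_ARGS variable in the unit suggests the package may also read extra options from an environment file, but that is not confirmed here.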

Although not necessary (and nothing to do with wsdd on the nettop), I performed the steps given in my 2020 blog post ‘A Linux command-line utility to discover and list WSD-enabled computers and printers on a home network‘. Works a treat.

SMB

This SMB configuration is for my home network that uses Broadcast NetBIOS Name Resolution, SMB and WS-Discovery. See the following posts (and all the comments on each, some of which contain important updates) for an explanation of how I set these up, making it relatively straightforward to add a device that uses the SMB protocol and enable it to browse shares on the other machines, and vice versa.

Note also that the smb, nmb and wsdd services must be running (see the next section).

I used the command ‘ip address‘ to find out the names of the wired and wireless interfaces, then I edited the file /etc/samba/smb.conf to contain the following (the Workgroup name in my home network is ‘HOME’ rather than the usual default of ‘WORKGROUP’):

[global]

;no need to specify 'smb ports' as ports 139 & 445 used by default

workgroup = HOME

netbios name = ion330ht

case sensitive = no

browseable = yes

;If this machine becomes a Master Browser, the following parameter allows it to hold the browse list

browse list = yes

printcap name = cups

printing = cups

log file = /var/log/samba/log.%m

max log size = 50

security = user

map to guest = bad user

encrypt passwords = yes

passdb backend = tdbsam

domain master = no

local master = yes

preferred master = yes

name resolve order = bcast

dns proxy = no

;Listen for NetBIOS on Ethernet and Wireless interfaces

;Names of the interfaces found using the 'ip address' command

interfaces = enp0s10 wlp2s0

server string = Samba Server on ion330ht

log level = 2

[netlogon]

comment = Network Logon Service

path = /var/lib/samba/netlogon

guest ok = yes

[printers]

comment = All Printers

path = /var/spool/samba

guest ok = yes

printable = yes

create mask = 0700

[print$]

path = /var/lib/samba/printers

write list = @adm root

guest ok = yes

[fitzcarraldo]

path = /home/fitzcarraldo/Public-fitzcarraldo

comment = To pass files to and from ion330ht

browseable = yes

public = yes

writable = yes

valid users = fitzcarraldo

I used the command ‘smbpasswd -a fitzcarraldo‘ to specify my SMB password, which has to be the same as my Linux password.

Starting Services

‘Application Menu’ > ‘Preferences’ > ‘YaST Services Manager’

In addition to any services already configured to start ‘On Boot’, make sure the following are set to start ‘On Boot’:

- clamd

- cups

- nmb

- ntpd

- smb

- wsdd

User’s Locale

Even though I had configured during installation (and confirmed after installation using YaST) the language, keyboard language and location as British English and Europe/London respectively, the dates of files displayed by PCManFM-Qt were still in US format. I added the following lines to the file ~/.profile to fix that:

export LANG="en_GB.UTF-8"

export LC_CTYPE="en_GB.UTF-8"

export LC_NUMERIC="en_GB.UTF-8"

export LC_TIME="en_GB.UTF-8"

export LC_COLLATE="en_GB.UTF-8"

export LC_MONETARY="en_GB.UTF-8"

export LC_MESSAGES="en_GB.UTF-8"

export LC_PAPER="en_GB.UTF-8"

export LC_NAME="en_GB.UTF-8"

export LC_ADDRESS="en_GB.UTF-8"

export LC_TELEPHONE="en_GB.UTF-8"

export LC_MEASUREMENT="en_GB.UTF-8"

export LC_IDENTIFICATION="en_GB.UTF-8"

export LC_ALL=""

Subsequently I discovered that the file /etc/sysconfig/language contains variables that I probably could have edited manually to achieve the same thing for users’ accounts.

GUI Appearance

As I am sitting on a sofa viewing the TV screen from a distance, text and icons have to be larger than on a normal desktop or laptop machine. This was easy enough to configure.

I right-clicked on the LXQt Panel and selected ‘Configure Panel’ to increase the height of the Panel and the size of the Panel icons and Panel font. I selected ‘Preferences’ > ‘LXQt Settings’ > ‘Appearance’ to increase the size of the icons and font in the rest of the UI, to change the icon theme to Oxygen, and to change the mouse cursor size to 50. I selected ‘Preferences’ > ‘LXQt Settings’ > ‘Desktop’ (or right-click on the Desktop and select ‘Desktop Preferences’) to increase the icon size and font size on the Desktop.

I increased the font size of the Firefox address bar, bookmarks toolbar, tabs and page using the two methods (devp and userChrome.css) described on the following Mozilla Support page: Text size of menus and tool bars way too small. I want to be able to increase the size.

Firefox font size on my 43-inch TV screen.

ClamAV virus signatures database was not being updated

The ClamAV signatures database was not being updated automatically after I installed ClamAV, so I needed to fix that.

Using the following commands listed on the openSUSE Wiki page for ClamAV did not work, because there is no freshclam.service file:

fitzcarraldo@ion330ht:~> sudo systemctl start freshclam

fitzcarraldo@ion330ht:~> sudo systemctl enable freshclam

I suspected that GeckoLinux/openSUSE Tumbleweed uses systemd timers instead of cron, and indeed I found a timer file for freshclam:

fitzcarraldo@ion330ht:~> locate timer | grep fresh

/usr/lib/systemd/system/freshclam.timer

fitzcarraldo@ion330ht:~> cat /usr/lib/systemd/system/freshclam.timer

[Unit]

Description=Timer for freshclam virus definitions downloader

[Timer]

OnBootSec=5m

OnUnitActiveSec=2h

Persistent=true

[Install]

WantedBy=timers.target

I enabled it as follows:

fitzcarraldo@ion330ht:~> sudo systemctl enable freshclam.timer

[sudo] password for root:

Created symlink /etc/systemd/system/timers.target.wants/freshclam.timer → /usr/lib/systemd/system/freshclam.timer.

fitzcarraldo@ion330ht:~> sudo systemctl start freshclam.timer

fitzcarraldo@ion330ht:~> sudo systemctl is-active freshclam.timer

active

systemd now runs freshclam 5 minutes after the machine boots and every 2 hours thereafter.

Automatic scanning for viruses in the Downloads directory

See my 2017 blog post Using the ClamAV daemon to scan files placed in my Downloads directory in Gentoo Linux, which I have implemented successfully on machines running Gentoo Linux and Lubuntu. However, in GeckoLinux it took a bit more effort to get the scheme working.

GeckoLinux Rolling (and, I assume, also openSUSE Tumbleweed) allocates clamav to a user named ‘vscan‘ and a group named ‘vscan‘ instead of a user named ‘clamav‘ and a group named ‘clamav‘.

fitzcarraldo@ion330ht:~> ls -la /var/lib/clamav

total 343504

drwxr-xr-x 1 vscan vscan 84 Jan 14 20:27 .

drwxr-xr-x 1 root root 534 Jan 11 12:08 ..

-rw-r--r-- 1 vscan vscan 293670 Jan 11 12:36 bytecode.cvd

-rw-r--r-- 1 vscan vscan 180965376 Jan 14 10:29 daily.cld

-rw-r--r-- 1 vscan vscan 69 Jan 11 12:33 freshclam.dat

-rw-r--r-- 1 vscan vscan 170479789 Jan 11 12:35 main.cvd

Why GeckoLinux (and, I assume, openSUSE) is different from Gentoo Linux and *buntu I don’t know, but I wish Linux distributions were consistent in such cases.

This test command did not work:

fitzcarraldo@ion330ht:~> clamdscan --fdpass --move=/home/fitzcarraldo/virus-quarantine /home/fitzcarraldo/eicarcom2.zip

WARNING: Ignoring deprecated option AllowSupplementaryGroups at /etc/clamd.conf:790

/home/fitzcarraldo/eicarcom2.zip: File path check failure: Permission denied. ERROR

/home/fitzcarraldo/eicarcom2.zip: File path check failure: Permission denied. ERROR

----------- SCAN SUMMARY -----------

Infected files: 0

Total errors: 2

Time: 0.003 sec (0 m 0 s)

Start Date: 2022:01:14 20:36:05

End Date: 2022:01:14 20:36:05

Anyway, this is what I did (I am not sure precisely which command or commands below were necessary to get things working):

fitzcarraldo@ion330ht:~> setfacl -Rd -m 'u:vscan:rx' /home/fitzcarraldo

fitzcarraldo@ion330ht:~> sudo setfacl -Rd -m 'u:vscan:rx' /home/fitzcarraldo

fitzcarraldo@ion330ht:~> sudo usermod -a -G fitzcarraldo vscan

fitzcarraldo@ion330ht:~> sudo usermod -a -G vscan fitzcarraldo

fitzcarraldo@ion330ht:~> groups

fitzcarraldo vscan users video lp audio network storage wheel autologin

fitzcarraldo@ion330ht:~> sudo reboot

(This was the reason I installed the package acl I mentioned earlier.)
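The ‘File path check failure: Permission denied’ error suggests that the clamd user (vscan here) could not reach the file, although I cannot say for certain which directory was the obstacle. A small helper like the one below could help narrow that down by listing every directory on the way to a path, so that each can be tested in turn. It is only a sketch: list_ancestors is a made-up name and it assumes a normalised absolute path with no doubled slashes.

```shell
#!/bin/sh
# Sketch: print every directory on the way to an absolute path, one per line.
# list_ancestors is a hypothetical helper name.
# Assumes a normalised absolute path (no "//", "." or ".." components).
list_ancestors() {
    rest="${1#/}"      # drop the leading slash
    current=""
    while [ -n "$rest" ]; do
        part="${rest%%/*}"            # first remaining component
        current="$current/$part"
        printf '%s\n' "$current"
        case "$rest" in
            */*) rest="${rest#*/}" ;; # more components follow
            *)   rest="" ;;           # that was the last one
        esac
    done
}

# Example: report any directory that user vscan cannot enter.
# list_ancestors /home/fitzcarraldo/Downloads | while read -r dir; do
#     sudo -u vscan test -x "$dir" || echo "vscan cannot enter $dir"
# done
```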

After the above changes, this test command does work:

fitzcarraldo@ion330ht:~> clamdscan --fdpass --move=/home/fitzcarraldo/virus-quarantine /home/fitzcarraldo/eicarcom2.zip

WARNING: Ignoring deprecated option AllowSupplementaryGroups at /etc/clamd.conf:790

/home/fitzcarraldo/eicarcom2.zip: Win.Test.EICAR_HDB-1 FOUND

/home/fitzcarraldo/eicarcom2.zip: moved to '/home/fitzcarraldo/virus-quarantine/eicarcom2.zip'

----------- SCAN SUMMARY -----------

Infected files: 1

Time: 0.020 sec (0 m 0 s)

Start Date: 2022:01:14 20:41:27

End Date: 2022:01:14 20:41:27

Also the scheme described in my aforementioned 2017 post now works in this installation.

Corrupted filesystem

Not long after I installed GeckoLinux I left the nettop running unattended on several occasions, and a couple of times I returned to find the HDD clicking rapidly (I assume this was the noise of the head continually seeking unsuccessfully), and had to press the machine’s Power switch in order to stop this. As the nettop had been used very little and was in almost new condition, I suspected that the problem was not caused by the HDD but rather by the software installation. I have read about corruption of Btrfs filesystems on several occasions in the past, so I wondered if the problem was caused by Btrfs itself.

I booted the Live pendrive that I had used to install GeckoLinux Rolling LXQt, became the root user (‘sudo su‘) and entered the command ‘btrfs check /dev/sda1‘, which returned no errors. I did some searching on the Web and came across commands such as ‘btrfs check --repair‘ which appeared to be analogous to ‘fsck‘ for other filesystems. It was only later that I found an article ‘How to recover a BTRFS partition‘ with a dire warning about only using that command as a last resort. Before finding that article I ran the following commands:

localhost:/home/linux # btrfs rescue zero-log /dev/sda1

Clearing log on /dev/sda1, previous log_root 0, level 0

localhost:/home/linux # btrfs check --repair /dev/sda1

enabling repair mode

WARNING:

Do not use --repair unless you are advised to do so by a developer

or an experienced user, and then only after having accepted that no

fsck can successfully repair all types of filesystem corruption. Eg.

some software or hardware bugs can fatally damage a volume.

The operation will start in 10 seconds.

Use Ctrl-C to stop it.

10 9 8 7 6 5 4 3 2 1

Starting repair.

Opening filesystem to check...

Checking filesystem on /dev/sda1

UUID: 82a56d4a-a234-4543-8596-99d98b84c581

ERROR: Corrupted fs, no valid METADATA block group found

Darn it! I tried the following command to see if it returned an error message:

localhost:/home/linux # btrfs rescue zero-log /dev/sda1

Clearing log on /dev/sda1, previous log_root 0, level 0

Then I found the aforementioned article ‘How to recover a BTRFS partition’ and entered the following command which the article states can be used to rebuild the filesystem metadata tree:

localhost:/home/linux # btrfs rescue chunk-recover /dev/sda1/

It was very slow, occasionally displaying lines ‘Scanning: <n> in dev0‘, so I didn’t hang around to wait for it to complete. When I came back several hours later I found that it had finished and was displaying the final lines of output in the terminal window:

[...]

Scanning: 14673166336 in dev0

Scanning: 14742372352 in dev0

Scanning: 14813675520 in dev0

Scanning: 14884454400 in dev0

Scanning: 14954708992 in dev0

Scanning: 15025487872 in dev0

Scanning: 15094693888 in dev0

Scanning: 15143624704 in dev0

Scanning: 15213707264 in dev0

Scanning: 15284486144 in dev0

Scanning: DONE in dev0

Check chunks successfully with no orphans

Chunk tree recovered successfully

I then ran the ‘rescue zero-log‘ and ‘check‘ commands again to see if there would be an error message:

localhost:/home/linux # btrfs rescue zero-log /dev/sda1

Clearing log on /dev/sda1, previous log_root 0, level 0

localhost:/home/linux # btrfs check /dev/sda1

Opening filesystem to check...

Checking filesystem on /dev/sda1

UUID: 82a56d4a-a234-4543-8596-99d98b84c581

[1/7] checking root items

Fixed 0 roots.

[2/7] checking extents

No device size related problem found

[3/7] checking free space tree

[4/7] checking fs roots

[5/7] checking only csums items (without verifying data)

[6/7] checking root refs

[7/7] checking quota groups skipped (not enabled on this FS)

found 159583424512 bytes used, no error found

total csum bytes: 155206908

total tree bytes: 614842368

total fs tree bytes: 389431296

total extent tree bytes: 28753920

btree space waste bytes: 131658663

file data blocks allocated: 188270157824

referenced 182537080832

I hoped the filesystem had indeed been repaired. I then rebooted the machine from the HDD and it has been fine ever since.

Minor bug in the applications menu entry YaST Filesystem Snapshots

Preferences menu in GeckoLinux LXQt on my 43-inch TV screen.

The entry ‘Preferences’ > ‘YaST Filesystem Snapshots’ in the LXQt Application Menu would not launch Snapper (there was not even an authentication prompt to enter the root user’s password). All the other menu entries under ‘Preferences’ worked as expected. What made this more perplexing was that ‘Filesystem Snapshots’ in the ‘Miscellaneous’ section of the YaST Control Centre (‘Preferences’ > ‘YaST’ in the LXQt application menu) did launch Snapper, and I believe that selection also uses the desktop configuration file /usr/share/applications/YaST2/org.opensuse.yast.Snapper.desktop.

To check if there was something wrong with the desktop configuration file I copied /usr/share/applications/YaST2/org.opensuse.yast.Snapper.desktop to the Desktop, changed its ownership to fitzcarraldo.fitzcarraldo, right-clicked on it and selected ‘Trust this executable’, and it worked as expected when I double-clicked on it. So why did it not work when selected from the application menu?

I then compared the Snapper desktop file to the other YaST2 desktop files in the directory:

ion330ht:/home/fitzcarraldo # grep Exec /usr/share/applications/YaST2/org.opensuse.yast*

/usr/share/applications/YaST2/org.opensuse.yast.Alternatives.desktop:Exec=/usr/bin/xdg-su -c "/sbin/yast2 alternatives"

/usr/share/applications/YaST2/org.opensuse.yast.Bootloader.desktop:Exec=xdg-su -c "/sbin/yast2 bootloader"

/usr/share/applications/YaST2/org.opensuse.yast.CheckMedia.desktop:Exec=/sbin/yast2 checkmedia

/usr/share/applications/YaST2/org.opensuse.yast.Disk.desktop:Exec=xdg-su -c "/sbin/yast2 partitioner"

/usr/share/applications/YaST2/org.opensuse.yast.Firewall.desktop:Exec=xdg-su -c "/sbin/yast2 firewall"

/usr/share/applications/YaST2/org.opensuse.yast.Host.desktop:Exec=xdg-su -c "/sbin/yast2 host"

/usr/share/applications/YaST2/org.opensuse.yast.Installation.desktop:Exec=/bin/true

/usr/share/applications/YaST2/org.opensuse.yast.Keyboard.desktop:Exec=xdg-su -c "/sbin/yast2 keyboard"

/usr/share/applications/YaST2/org.opensuse.yast.LAN.desktop:Exec=xdg-su -c "/sbin/yast2 lan"

/usr/share/applications/YaST2/org.opensuse.yast.Language.desktop:Exec=xdg-su -c "/sbin/yast2 language"

/usr/share/applications/YaST2/org.opensuse.yast.NTPClient.desktop:Exec=xdg-su -c "/sbin/yast2 ntp-client"

/usr/share/applications/YaST2/org.opensuse.yast.OnlineUpdateConfiguration.desktop:Exec=/sbin/yast2 online_update_configuration

/usr/share/applications/YaST2/org.opensuse.yast.Printer.desktop:Exec=xdg-su -c "/sbin/yast2 printer"

/usr/share/applications/YaST2/org.opensuse.yast.Proxy.desktop:Exec=xdg-su -c "/sbin/yast2 proxy"

/usr/share/applications/YaST2/org.opensuse.yast.ReleaseNotes.desktop:Exec=/sbin/yast2 inst_release_notes

/usr/share/applications/YaST2/org.opensuse.yast.Remote.desktop:Exec=xdg-su -c "/sbin/yast2 remote"

/usr/share/applications/YaST2/org.opensuse.yast.Security.desktop:Exec=xdg-su -c "/sbin/yast2 security"

/usr/share/applications/YaST2/org.opensuse.yast.ServicesManager.desktop:Exec=xdg-su -c "/sbin/yast2 services-manager"

/usr/share/applications/YaST2/org.opensuse.yast.Snapper.desktop:Exec=/usr/bin/xdg-su -c '/sbin/yast2 snapper'

/usr/share/applications/YaST2/org.opensuse.yast.Sudo.desktop:Exec=xdg-su -c "/sbin/yast2 sudo"

/usr/share/applications/YaST2/org.opensuse.yast.SWSingle.desktop:Exec=xdg-su -c "/sbin/yast2 sw_single"

/usr/share/applications/YaST2/org.opensuse.yast.SWSource.desktop:Exec=xdg-su -c "/sbin/yast2 repositories"

/usr/share/applications/YaST2/org.opensuse.yast.Sysconfig.desktop:Exec=xdg-su -c "/sbin/yast2 sysconfig"

/usr/share/applications/YaST2/org.opensuse.yast.Timezone.desktop:Exec=xdg-su -c "/sbin/yast2 timezone"

/usr/share/applications/YaST2/org.opensuse.yast.Upgrade.desktop:Exec=/bin/true

/usr/share/applications/YaST2/org.opensuse.yast.Users.desktop:Exec=xdg-su -c "/sbin/yast2 users"

To get the LXQt application menu item ‘Preferences’ > ‘YaST Filesystem Snapshots’ to work I had to do the following:

1. Edit /usr/share/applications/YaST2/org.opensuse.yast.Snapper.desktop and change the following line:

Exec=/usr/bin/xdg-su -c '/sbin/yast2 snapper'

to:

Exec=xdg-su -c "/sbin/yast2 snapper"

2. Run the following command (as root user):

ion330ht:/home/fitzcarraldo # update-desktop-database /usr/share/applications

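The file org.opensuse.yast.Alternatives.desktop contains Exec=/usr/bin/xdg-su -c "/sbin/yast2 alternatives" and works, and xdg-su is indeed in the directory /usr/bin/, so the absolute path on its own does not explain why the original Snapper desktop file would not work from the LXQt application menu. I am not certain of the cause, but the only other difference is the quoting: the Snapper entry was the only one to wrap the command in single quotes, and the Desktop Entry Specification only recognises double quotes for quoting arguments in an Exec line, so that is the likely culprit. Anyway, the modified file works, although I could have done without spending several hours on what was an inconvenience rather than a show-stopper.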
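For anyone who would rather script the edit in step 1, here is a minimal Python sketch. It is only an illustration: the function names normalise_exec and normalise_desktop_text are hypothetical, and the sample desktop entry is cut down to a few lines (its Name value is assumed from the menu item title). It works on text only, so writing the result back to /usr/share/applications/YaST2/ and running update-desktop-database would still have to be done as root.

```python
import re

# Matches an Exec line that wraps the xdg-su command in single quotes,
# with or without the absolute /usr/bin/ path in front of xdg-su.
SINGLE_QUOTED = re.compile(r"^Exec=(?:/usr/bin/)?xdg-su -c '([^']*)'\s*$")


def normalise_exec(line: str) -> str:
    """Rewrite a single-quoted xdg-su Exec line to the double-quoted form
    used by the other YaST entries; return any other line unchanged."""
    match = SINGLE_QUOTED.match(line)
    if match is None:
        return line
    return f'Exec=xdg-su -c "{match.group(1)}"'


def normalise_desktop_text(text: str) -> str:
    """Apply normalise_exec to every line of a .desktop file's contents."""
    return "\n".join(normalise_exec(line) for line in text.splitlines()) + "\n"


if __name__ == "__main__":
    # Cut-down, illustrative desktop entry (not the full packaged file).
    sample = (
        "[Desktop Entry]\n"
        "Name=YaST Filesystem Snapshots\n"
        "Exec=/usr/bin/xdg-su -c '/sbin/yast2 snapper'\n"
    )
    print(normalise_desktop_text(sample), end="")
```

Lines that already use double quotes, or that do not call xdg-su at all (such as Exec=/bin/true), pass through untouched, so the function could be run over all the YaST desktop files without altering the ones that already work.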

Applications Menu entries for YaST

I personally found the large number of YaST entries in the Application Menu confusing and unnecessary (see the screenshots above and below), and they make the menu look cluttered. The individual YaST modules can all be reached via ‘Preferences’ > ‘YaST Control Center’, so a shorter menu could have been implemented instead. Also, the three entries ‘Other’ > ‘YaST Software’, ‘Preferences’ > ‘YaST Software’ and ‘Preferences’ > ‘YaST Software Management’ all do the same thing.

‘Other’ menu in GeckoLinux LXQt on my 43-inch TV screen.

ASRock CIR MCE Remote

I should point out that I tinkered with the infrared remote to scratch an itch, because the Rii i8 mini wireless keyboard with touchpad is far superior to a CIR MCE remote and can be used to control the Desktop Environment and any application, including KODI, with ease. MCE remotes are a pain in the neck to configure. The KODI Wiki states: “MCE Remotes – Infrared remote controls made for computers that follow the MCE standard. These remotes should work with Kodi out-of-the-box on Windows and Linux.” Good luck with that!

Anyway, the following are useful background reading on configuring Linux to use infrared remotes:

Here is what I had to do to configure GeckoLinux to recognise the ASRock MCE remote:

1. Ensure the IR receiver is enabled in the ASRock ION 330HT BIOS.

2. Do not install lirc. If it is installed, uninstall it and any associated LIRC packages. The one exception is liblirc_client0, which is a dependency of vlc in GeckoLinux/openSUSE, so keep it unless you don’t want VLC.

3. The nuvoton-cir module should be loaded automatically at boot if the IR receiver is enabled in the BIOS:

ion330ht:/home/fitzcarraldo # lsmod | grep nuvoton

nuvoton_cir 32768 0

rc_core 65536 6 ir_rc6_decoder,rc_rc6_mce,cec,ir_rc5_decoder,nuvoton_cir

ion330ht:/home/fitzcarraldo # lsmod | grep lirc

ion330ht:/home/fitzcarraldo #

4. Install the package v4l-utils, which provides the ir-keytable utility and the files /etc/rc_maps.cfg, /lib/udev/rc_keymaps/*.toml and /usr/lib/udev/rules.d/70-infrared.rules.

5. Enter the command ‘ir-keytable’ and you should see some output similar to the following:

ion330ht:/home/fitzcarraldo # ir-keytable

Found /sys/class/rc/rc0/ with:

Name: Nuvoton w836x7hg Infrared Remote Transceiver

Driver: nuvoton-cir

Default keymap: rc-rc6-mce

Input device: /dev/input/event6

LIRC device: /dev/lirc0

Supported kernel protocols: lirc rc-5 rc-5-sz jvc sony nec sanyo mce_kbd rc-6 sharp xmp imon rc-mm

Enabled kernel protocols: lirc rc-6

bus: 25, vendor/product: 1050:00b4, version: 0x0073

Repeat delay = 500 ms, repeat period = 125 ms

6. Enter the command ‘ir-keytable -t’ and press some keys on the remote. You should see something like the following:

ion330ht:/home/fitzcarraldo # ir-keytable -t

Testing events. Please, press CTRL-C to abort.

297.938077: lirc protocol(rc6_mce): scancode = 0x800f0401

297.938119: event type EV_MSC(0x04): scancode = 0x800f0401

297.938119: event type EV_KEY(0x01) key_down: KEY_NUMERIC_1(0x0201)

297.938119: event type EV_SYN(0x00).

298.154989: event type EV_KEY(0x01) key_up: KEY_NUMERIC_1(0x0201)

298.154989: event type EV_SYN(0x00).

301.628475: lirc protocol(rc6_mce): scancode = 0x800f0402 toggle=1

301.628516: event type EV_MSC(0x04): scancode = 0x800f0402

301.628516: event type EV_KEY(0x01) key_down: KEY_NUMERIC_2(0x0202)

301.628516: event type EV_SYN(0x00).

301.846981: event type EV_KEY(0x01) key_up: KEY_NUMERIC_2(0x0202)

301.846981: event type EV_SYN(0x00).

307.577177: lirc protocol(rc6_mce): scancode = 0x800f0422

307.577219: event type EV_MSC(0x04): scancode = 0x800f0422

307.577219: event type EV_KEY(0x01) key_down: KEY_OK(0x0160)

307.577219: event type EV_SYN(0x00).

307.725639: lirc protocol(rc6_mce): scancode = 0x800f0422

307.725671: event type EV_MSC(0x04): scancode = 0x800f0422

307.725671: event type EV_SYN(0x00).

307.943009: event type EV_KEY(0x01) key_up: KEY_OK(0x0160)

307.943009: event type EV_SYN(0x00).

311.272866: lirc protocol(rc6_mce): scancode = 0x800f040d toggle=1

311.272930: event type EV_MSC(0x04): scancode = 0x800f040d

311.272930: event type EV_KEY(0x01) key_down: KEY_MEDIA(0x00e2)

311.272930: event type EV_SYN(0x00).

311.420857: lirc protocol(rc6_mce): scancode = 0x800f040d toggle=1

311.420900: event type EV_MSC(0x04): scancode = 0x800f040d

311.420900: event type EV_SYN(0x00).

311.638978: event type EV_KEY(0x01) key_up: KEY_MEDIA(0x00e2)

311.638978: event type EV_SYN(0x00).

^C

7. Check the file /etc/rc_maps.cfg exists and includes the following line:

* rc-rc6-mce rc6_mce.toml

ion330ht:/home/fitzcarraldo # cat /etc/rc_maps.cfg

#

# Keymaps table

#

# This table creates an association between a keycode file and a kernel

# driver. It can be used to automatically override a keycode definition.

#

# Although not yet tested, it is mented to be added at udev.

#

# To use, you just need to run:

# ./ir-keytable -a

#

# Or, if the remote is not the first device:

# ./ir-keytable -a -s rc1 # for RC at rc1

#

# Format:

# driver - name of the driver provided via uevent - use * for any driver

# table - RC keymap table, provided via uevent - use * for any table

# file - file name. If directory is not specified, it will default to

# /etc/rc_keymaps.

# For example:

# driver table file

# cx8800 * ./keycodes/rc5_hauppauge_new.toml

# * rc-avermedia-m135a-rm-jx ./keycodes/kworld_315u.toml

# saa7134 rc-avermedia-m135a-rm-jx ./keycodes/keycodes/nec_terratec_cinergy_xs.toml

# em28xx * ./keycodes/kworld_315u.toml

# * * ./keycodes/rc5_hauppauge_new.toml

# Table to automatically load the rc maps for the bundled IR's provided with the

# devices supported by the linux kernel

#driver table file

* rc-adstech-dvb-t-pci adstech_dvb_t_pci.toml

* rc-alink-dtu-m alink_dtu_m.toml

* rc-anysee anysee.toml

* rc-apac-viewcomp apac_viewcomp.toml

* rc-astrometa-t2hybrid astrometa_t2hybrid.toml

* rc-asus-pc39 asus_pc39.toml

* rc-asus-ps3-100 asus_ps3_100.toml

* rc-ati-tv-wonder-hd-600 ati_tv_wonder_hd_600.toml

* rc-ati-x10 ati_x10.toml

* rc-avermedia-a16d avermedia_a16d.toml

* rc-avermedia-cardbus avermedia_cardbus.toml

* rc-avermedia-dvbt avermedia_dvbt.toml

* rc-avermedia-m135a avermedia_m135a.toml

* rc-avermedia-m733a-rm-k6 avermedia_m733a_rm_k6.toml

* rc-avermedia-rm-ks avermedia_rm_ks.toml

* rc-avermedia avermedia.toml

* rc-avertv-303 avertv_303.toml

* rc-azurewave-ad-tu700 azurewave_ad_tu700.toml

* rc-beelink-gs1 beelink_gs1.toml

* rc-behold-columbus behold_columbus.toml

* rc-behold behold.toml

* rc-budget-ci-old budget_ci_old.toml

* rc-cec cec.toml

* rc-cinergy-1400 cinergy_1400.toml

* rc-cinergy cinergy.toml

* rc-ct-90405 ct_90405.toml

* rc-d680-dmb d680_dmb.toml

* rc-delock-61959 delock_61959.toml

* rc-dib0700-nec dib0700_nec.toml

* rc-dib0700-rc5 dib0700_rc5.toml

* rc-digitalnow-tinytwin digitalnow_tinytwin.toml

* rc-digittrade digittrade.toml

* rc-dm1105-nec dm1105_nec.toml

* rc-dntv-live-dvb-t dntv_live_dvb_t.toml

* rc-dntv-live-dvbt-pro dntv_live_dvbt_pro.toml

* rc-dtt200u dtt200u.toml

* rc-dvbsky dvbsky.toml

* rc-dvico-mce dvico_mce.toml

* rc-dvico-portable dvico_portable.toml

* rc-em-terratec em_terratec.toml

* rc-encore-enltv-fm53 encore_enltv_fm53.toml

* rc-encore-enltv encore_enltv.toml

* rc-encore-enltv2 encore_enltv2.toml

* rc-evga-indtube evga_indtube.toml

* rc-eztv eztv.toml

* rc-flydvb flydvb.toml

* rc-flyvideo flyvideo.toml

* rc-fusionhdtv-mce fusionhdtv_mce.toml

* rc-gadmei-rm008z gadmei_rm008z.toml

* rc-geekbox geekbox.toml

* rc-genius-tvgo-a11mce genius_tvgo_a11mce.toml

* rc-gotview7135 gotview7135.toml

* rc-hauppauge hauppauge.toml

* rc-hisi-poplar hisi_poplar.toml

* rc-hisi-tv-demo hisi_tv_demo.toml

* rc-imon-mce imon_mce.toml

* rc-imon-pad imon_pad.toml

* rc-imon-rsc imon_rsc.toml

* rc-iodata-bctv7e iodata_bctv7e.toml

* rc-it913x-v1 it913x_v1.toml

* rc-it913x-v2 it913x_v2.toml

* rc-kaiomy kaiomy.toml

* rc-khadas khadas.toml

* rc-khamsin khamsin.toml

* rc-kworld-315u kworld_315u.toml

* rc-kworld-pc150u kworld_pc150u.toml

* rc-kworld-plus-tv-analog kworld_plus_tv_analog.toml

* rc-leadtek-y04g0051 leadtek_y04g0051.toml

* rc-lme2510 lme2510.toml

* rc-manli manli.toml

* rc-mecool-kii-pro mecool_kii_pro.toml

* rc-mecool-kiii-pro mecool_kiii_pro.toml

* rc-medion-x10-digitainer medion_x10_digitainer.toml

* rc-medion-x10-or2x medion_x10_or2x.toml

* rc-medion-x10 medion_x10.toml

* rc-minix-neo minix_neo.toml

* rc-msi-digivox-ii msi_digivox_ii.toml

* rc-msi-digivox-iii msi_digivox_iii.toml

* rc-msi-tvanywhere-plus msi_tvanywhere_plus.toml

* rc-msi-tvanywhere msi_tvanywhere.toml

* rc-nebula nebula.toml

* rc-nec-terratec-cinergy-xs nec_terratec_cinergy_xs.toml

* rc-norwood norwood.toml

* rc-npgtech npgtech.toml

* rc-odroid odroid.toml

* rc-pctv-sedna pctv_sedna.toml

* rc-pine64 pine64.toml

* rc-pinnacle-color pinnacle_color.toml

* rc-pinnacle-grey pinnacle_grey.toml

* rc-pinnacle-pctv-hd pinnacle_pctv_hd.toml

* rc-pixelview-002t pixelview_002t.toml

* rc-pixelview-mk12 pixelview_mk12.toml

* rc-pixelview-new pixelview_new.toml

* rc-pixelview pixelview.toml

* rc-powercolor-real-angel powercolor_real_angel.toml

* rc-proteus-2309 proteus_2309.toml

* rc-purpletv purpletv.toml

* rc-pv951 pv951.toml

* rc-rc6-mce rc6_mce.toml

* rc-real-audio-220-32-keys real_audio_220_32_keys.toml

* rc-reddo reddo.toml

* rc-snapstream-firefly snapstream_firefly.toml

* rc-streamzap streamzap.toml

* rc-su3000 su3000.toml

* rc-tanix-tx3mini tanix_tx3mini.toml

* rc-tanix-tx5max tanix_tx5max.toml

* rc-tbs-nec tbs_nec.toml

* rc-technisat-ts35 technisat_ts35.toml

* rc-technisat-usb2 technisat_usb2.toml

* rc-terratec-cinergy-c-pci terratec_cinergy_c_pci.toml

* rc-terratec-cinergy-s2-hd terratec_cinergy_s2_hd.toml

* rc-terratec-cinergy-xs terratec_cinergy_xs.toml

* rc-terratec-slim-2 terratec_slim_2.toml

* rc-terratec-slim terratec_slim.toml

* rc-tevii-nec tevii_nec.toml

* rc-tivo tivo.toml

* rc-total-media-in-hand-02 total_media_in_hand_02.toml

* rc-total-media-in-hand total_media_in_hand.toml

* rc-trekstor trekstor.toml

* rc-tt-1500 tt_1500.toml

* rc-twinhan-dtv-cab-ci twinhan_dtv_cab_ci.toml

* rc-twinhan1027 twinhan_vp1027_dvbs.toml

* rc-vega-s9x vega_s9x.toml

* rc-videomate-k100 videomate_k100.toml

* rc-videomate-s350 videomate_s350.toml

* rc-videomate-tv-pvr videomate_tv_pvr.toml

* rc-videostrong-kii-pro kii_pro.toml

* rc-wetek-hub wetek_hub.toml

* rc-wetek-play2 wetek_play2.toml

* rc-winfast-usbii-deluxe winfast_usbii_deluxe.toml

* rc-winfast winfast.toml

* rc-x96max x96max.toml

* rc-xbox-dvd xbox_dvd.toml

* rc-zx-irdec zx_irdec.toml

# * * af9005.toml # found in af9005-remote.c

# * * az6027.toml # found in az6027.c

# * * cinergyt2.toml # found in cinergyT2-core.c

# * * dibusb.toml # found in dibusb-common.c

# * * digitv.toml # found in digitv.c

# * * megasky.toml # found in m920x.c

# * * tvwalkertwin.toml # found in m920x.c

# * * pinnacle310e.toml # found in m920x.c

# * * haupp.toml # found in nova-t-usb2.c

# * * opera1.toml # found in opera1.c

# * * vp702x.toml # found in vp702x.c

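8. Copy the file /lib/udev/rc_keymaps/rc6_mce.toml to /etc/rc_keymaps/rc6_mce.toml and edit the copy rather than the packaged original (according to the comments in /etc/rc_maps.cfg, a file name without a directory defaults to /etc/rc_keymaps). For example, this is my edited file: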

[[protocols]]

name = "rc6_mce"

protocol = "rc6"

variant = "rc6_mce"

[protocols.scancodes]

0x800f0400 = "KEY_KP0"

0x800f0401 = "KEY_KP1"

0x800f0402 = "KEY_KP2"

0x800f0403 = "KEY_KP3"

0x800f0404 = "KEY_KP4"

0x800f0405 = "KEY_KP5"

0x800f0406 = "KEY_KP6"

0x800f0407 = "KEY_KP7"

0x800f0408 = "KEY_KP8"

0x800f0409 = "KEY_KP9"

0x800f040a = "KEY_DELETE"

0x800f040b = "KEY_ENTER"

0x800f040c = "KEY_SLEEP" # Power

0x800f040d = "KEY_MEDIA" # Left Meta, Start

0x800f040e = "KEY_MUTE"

0x800f040f = "KEY_I" # Info

0x800f0410 = "KEY_VOLUMEUP" # Volume Up

0x800f0411 = "KEY_VOLUMEDOWN" # Volume Down

0x800f0412 = "KEY_CHANNELUP"

0x800f0413 = "KEY_CHANNELDOWN"

0x800f0414 = "KEY_FORWARD" # Fast forward

0x800f0415 = "KEY_REWIND" # Rewind

0x800f0416 = "KEY_PLAY"

0x800f0417 = "KEY_RECORD"

0x800f0418 = "KEY_PLAYPAUSE" # Was KEY_PLAY but didn't pause in Clementine

0x800f0419 = "KEY_STOP"

0x800f041a = "KEY_NEXTSONG" # Skip Next

0x800f041b = "KEY_PREVIOUSSONG" # Skip Previous

0x800f041c = "KEY_NUMERIC_POUND"

0x800f041d = "KEY_NUMERIC_STAR"

0x800f041e = "KEY_UP"

0x800f041f = "KEY_DOWN"

0x800f0420 = "KEY_LEFT"

0x800f0421 = "KEY_RIGHT"

0x800f0422 = "KEY_ENTER" # OK

0x800f0423 = "KEY_BACKSPACE" # Back / Exit

0x800f0424 = "KEY_DVD"

0x800f0425 = "KEY_TUNER"

0x800f0426 = "KEY_EPG"

0x800f0427 = "KEY_ZOOM"

0x800f043a = "KEY_BRIGHTNESSUP"

0x800f0446 = "KEY_TV"

0x800f0447 = "KEY_AUDIO"

0x800f0448 = "KEY_PVR"

0x800f0449 = "KEY_CAMERA"

0x800f044a = "KEY_VIDEO"

0x800f044c = "KEY_LANGUAGE"

0x800f044d = "KEY_TITLE"

0x800f044e = "KEY_PRINT"

0x800f0450 = "KEY_RADIO"

0x800f045a = "KEY_SUBTITLE"

0x800f045b = "KEY_RED"

0x800f045c = "KEY_GREEN" # Green

0x800f045d = "KEY_YELLOW"

0x800f045e = "KEY_BLUE" # Blue

0x800f0465 = "KEY_POWER2"

0x800f046e = "KEY_PLAYPAUSE"

0x800f046f = "KEY_MEDIA"

0x800f0480 = "KEY_BRIGHTNESSDOWN"

0x800f0481 = "KEY_PLAYPAUSE"

9. Run the following command to load the edited keymap and check that it works:

ion330ht:/home/fitzcarraldo # ir-keytable -c -w /etc/rc_keymaps/rc6_mce.toml

Read rc6_mce table

Old keytable cleared

Wrote 60 keycode(s) to driver

Protocols changed to rc-6

By the way, adding ‘-p RC-5,RC-6’ to that command would select the rc-5 and rc-6 protocols:

ion330ht:/home/fitzcarraldo # ir-keytable -c -p RC-5,RC-6 -w /etc/rc_keymaps/rc6_mce.toml

Read rc6_mce table

Old keytable cleared

Wrote 60 keycode(s) to driver

Protocols changed to rc-5 rc-6

10. Check that the protocols have been enabled and the keymap loaded:

ion330ht:/home/fitzcarraldo # ir-keytable

Found /sys/class/rc/rc0/ with:

Name: Nuvoton w836x7hg Infrared Remote Transceiver

Driver: nuvoton-cir

Default keymap: rc-rc6-mce

Input device: /dev/input/event7

LIRC device: /dev/lirc0

Supported kernel protocols: lirc rc-5 rc-5-sz jvc sony nec sanyo mce_kbd rc-6 sharp xmp imon rc-mm

Enabled kernel protocols: lirc rc-6

bus: 25, vendor/product: 1050:00b4, version: 0x0073

Repeat delay = 500 ms, repeat period = 125 ms

11. Reboot.

12. Check that the modified keymap has been loaded:

ion330ht:/home/fitzcarraldo # ir-keytable

Found /sys/class/rc/rc0/ with:

Name: Nuvoton w836x7hg Infrared Remote Transceiver

Driver: nuvoton-cir

Default keymap: rc-rc6-mce

Input device: /dev/input/event7

LIRC device: /dev/lirc0

Supported kernel protocols: lirc rc-5 rc-5-sz jvc sony nec sanyo mce_kbd rc-6 sharp xmp imon rc-mm

Enabled kernel protocols: lirc rc-6

bus: 25, vendor/product: 1050:00b4, version: 0x0073

Repeat delay = 500 ms, repeat period = 125 ms

ion330ht:/home/fitzcarraldo # ir-keytable -t

Testing events. Please, press CTRL-C to abort.

1392.769850: lirc protocol(rc6_mce): scancode = 0x800f040d toggle=1

1392.769898: event type EV_MSC(0x04): scancode = 0x800f040d

1392.769898: event type EV_KEY(0x01) key_down: KEY_MEDIA(0x002e)

1392.769898: event type EV_SYN(0x00).

1392.917858: lirc protocol(rc6_mce): scancode = 0x800f040d toggle=1

1392.917899: event type EV_MSC(0x04): scancode = 0x800f040d

1392.917899: event type EV_SYN(0x00).

1393.137843: event type EV_KEY(0x01) key_up: KEY_MEDIA(0x002e)

1393.137843: event type EV_SYN(0x00).

1409.275700: lirc protocol(rc6_mce): scancode = 0x800f0418

1409.275756: event type EV_MSC(0x04): scancode = 0x800f0418

1409.275756: event type EV_KEY(0x01) key_down: KEY_PLAYPAUSE(0x00a4)

1409.275756: event type EV_SYN(0x00).

1409.425095: lirc protocol(rc6_mce): scancode = 0x800f0418

1409.425131: event type EV_MSC(0x04): scancode = 0x800f0418

1409.425131: event type EV_SYN(0x00).

1409.641846: event type EV_KEY(0x01) key_up: KEY_PLAYPAUSE(0x00a4)

1409.641846: event type EV_SYN(0x00).

1411.757874: lirc protocol(rc6_mce): scancode = 0x800f0418 toggle=1

1411.757928: event type EV_MSC(0x04): scancode = 0x800f0418

1411.757928: event type EV_KEY(0x01) key_down: KEY_PLAYPAUSE(0x00a4)

1411.757928: event type EV_SYN(0x00).

1411.907269: lirc protocol(rc6_mce): scancode = 0x800f0418 toggle=1

1411.907296: event type EV_MSC(0x04): scancode = 0x800f0418

1411.907296: event type EV_SYN(0x00).

1412.125848: event type EV_KEY(0x01) key_up: KEY_PLAYPAUSE(0x00a4)

1412.125848: event type EV_SYN(0x00).

^C

However, not all the keys on the ASRock remote work in KODI when using the key names in the file rc6_mce.toml listed above. I might have been able to change some of the key names in the file to see if they would have the desired effect in KODI, but it is not worth the hassle when my Rii i8 wireless mini keyboard works perfectly with KODI, all other apps, and the Linux Desktop. I find KODI unintuitive in any case, so there is even less incentive to tinker further with the ASRock CIR MCE remote.

Furthermore, I have now disabled the CIR port in the BIOS because I found that the nettop was sometimes resuming from suspend without my triggering it from either the ASRock CIR MCE remote or the Rii i8 mini keyboard. ‘Boot From Onboard LAN’ is not enabled in the BIOS, so that was not the cause.

Disabling the nettop’s LEDs

The nettop is on my TV stand and its Power LED, LAN LED and SATA LED could become annoying, especially the Power LED, which blinks when the nettop is in Suspend mode. I therefore disabled all three in the BIOS by setting ‘Good Night LED’ to Enabled.

Conclusion

After over a decade I am actually using the ASRock ION 330HT nettop and have it connected to my 43-inch TV so that I can browse the Web properly from the comfort of my sofa and play all my FLAC (and MP3, OGG etc.) music files through the sound bar also connected to my TV. The Rii i8 wireless mini keyboard/touchpad works perfectly with the nettop, so the ASRock CIR MCE remote is redundant. Although I have a dedicated DVD player connected to the TV via a Composite Video cable, the nettop is connected via an HDMI cable so the image is nice and sharp.

GeckoLinux Rolling LXQt performs well on the nettop, and looks polished and crisp on the TV screen. I like it a lot so far. Other machines in my home network can browse SMB shares on the nettop, and vice versa, and the nettop can also be accessed using SSH. I need to become familiar with the package manager (GUI and command line) but have not had any trouble so far. The Btrfs filesystem ‘hiccup’ I mentioned earlier worries me a little, but I have had no further trouble since I repaired the filesystem. And I have actually used Snapper a couple of times to recover files I deleted too hastily. So GeckoLinux gets a thumbs up from me.